Jim Worthey • Lighting & Color Research • jim@jimworthey.com • 301-977-3551 • 11 Rye Court, Gaithersburg, MD 20878-1901, USA

Five Examples for Comparison

An Exception that Proves the Rule: Foveon X3 and Optional Prefilter

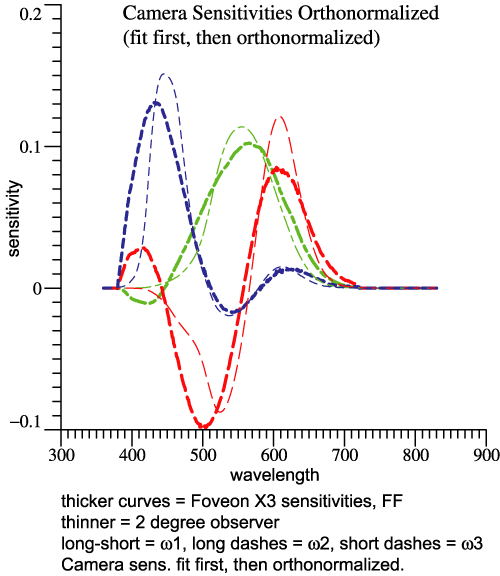

```matlab
Rcam = RCohen(rgbSens)           % projection matrix built from the camera functions
CamTemp = Rcam*OrthoBasis        % best fit to the human orthonormal basis
GramSchmidt(CamTemp, CamOmega)   % orthonormalize the columns of CamTemp; result is CamOmega
```

Here rgbSens is a matrix whose columns are the 3 camera sensor functions (in this case the Foveon functions). Rcam is the projection matrix based on the camera functions. OrthoBasis holds the 3 orthonormal basis vectors for human vision, often called Ω. CamTemp is then the best fit to OrthoBasis by linear combinations of the camera sensitivities: each column of CamTemp is the least-squares fit to the corresponding column of OrthoBasis. In general, the columns of CamTemp will not be an orthonormal set, so the Gram-Schmidt procedure is used to orthonormalize them. The orthonormal basis for the camera sensors is then CamOmega.
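For readers who want to try the three steps outside the author's environment, here is a minimal sketch in plain Python. It assumes every spectrum is sampled at the same wavelengths and stores a matrix as a list of rows (one row per wavelength, one column per function). `r_cohen`, `gram_schmidt`, and `fit_first_basis` are hypothetical stand-ins for `RCohen` and `GramSchmidt`: the projection uses the standard form of Cohen's matrix R, R = A(AᵀA)⁻¹Aᵀ, and the orthonormalization uses modified Gram-Schmidt, which may differ in detail from the author's routine.

```python
# Sketch of the "fit first" camera basis; not the author's code.
# Assumption: a matrix is a list of rows, one row per wavelength sample.

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def inverse(m):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)]
           for i, row in enumerate(m)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def r_cohen(a):
    """Hypothetical stand-in for RCohen: R = A (A'A)^-1 A', projection onto span(A)."""
    at = transpose(a)
    return matmul(matmul(a, inverse(matmul(at, a))), at)

def gram_schmidt(m):
    """Hypothetical stand-in for GramSchmidt: orthonormalize m's columns in order."""
    basis = []
    for v in transpose(m):
        w = list(v)
        for q in basis:  # modified Gram-Schmidt: remove components one at a time
            d = sum(x * y for x, y in zip(w, q))
            w = [wi - d * qi for wi, qi in zip(w, q)]
        norm = sum(x * x for x in w) ** 0.5
        basis.append([x / norm for x in w])
    return transpose(basis)

def fit_first_basis(rgb_sens, ortho_basis):
    r_cam = r_cohen(rgb_sens)              # Rcam = RCohen(rgbSens)
    cam_temp = matmul(r_cam, ortho_basis)  # CamTemp = Rcam*OrthoBasis
    return gram_schmidt(cam_temp)          # CamOmega
```

One quick sanity check: if the camera functions span exactly the same space as the human basis, `fit_first_basis` returns OrthoBasis unchanged, because the projection leaves it alone and Gram-Schmidt leaves an orthonormal set alone.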

That's the main result, and the camera's locus of unit monochromats is

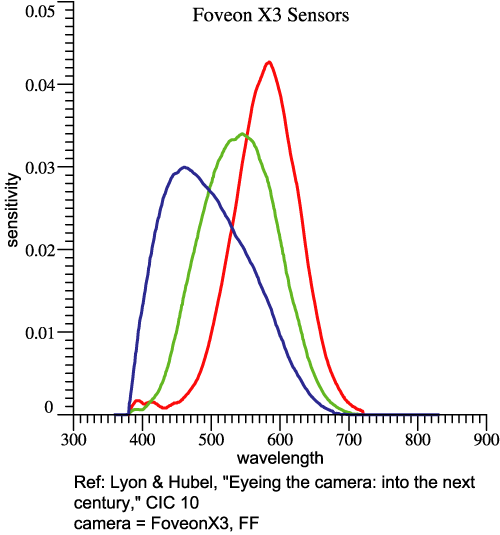

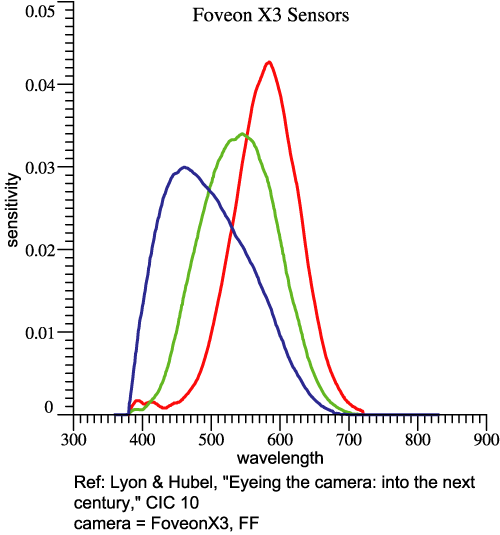

a parametric plot of the 3 columns of CamOmega.| Foveon X3 Sensor without Prefilter |

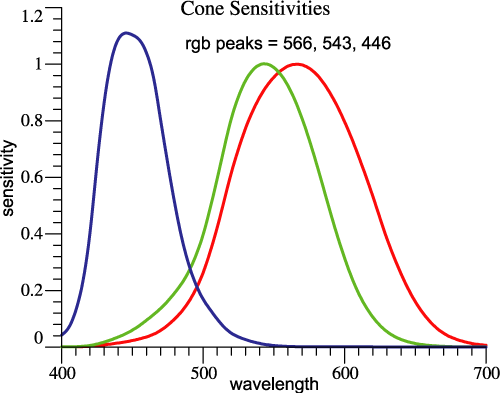

Human Eye

Foveon 3-Color Camera Sensor

Native Orthonormal Basis of Foveon X3

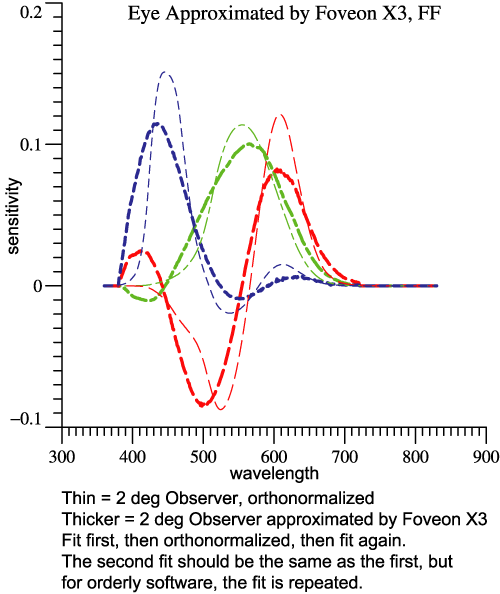

Fit to Human Orthonormal Basis by Foveon X3 Functions

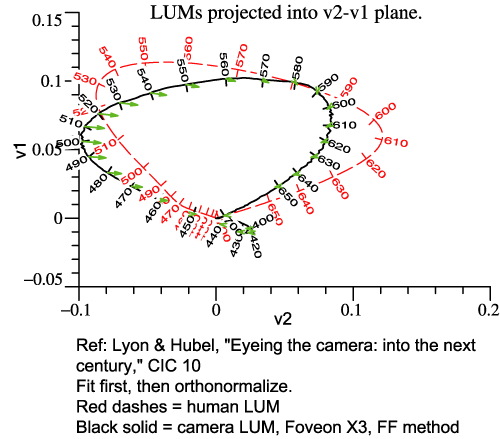

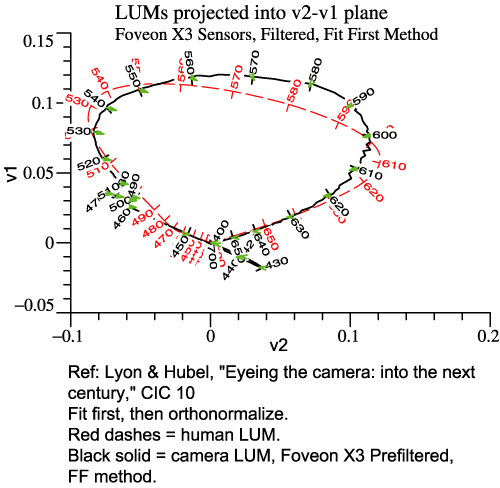

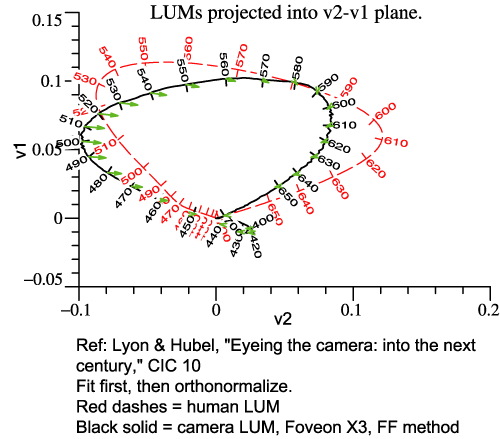

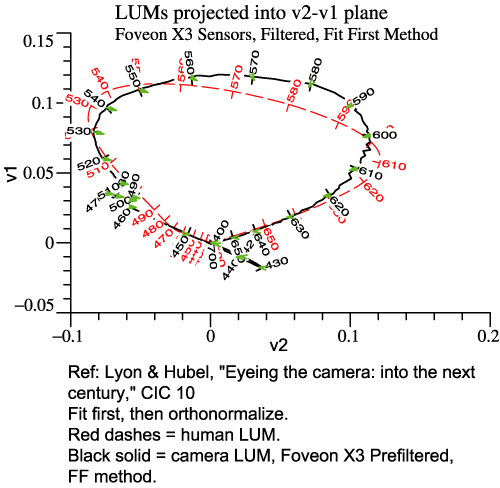

Red dashed = human LUM projected into v2-v1. Black solid = same projection of the Foveon X3's LUM. Heads of green arrows = fit to human by Foveon X3 functions, same projection.

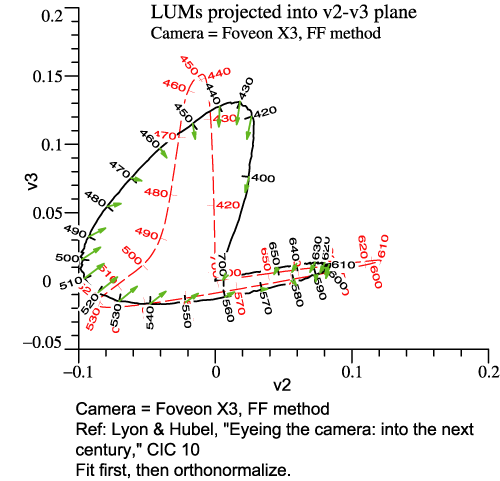

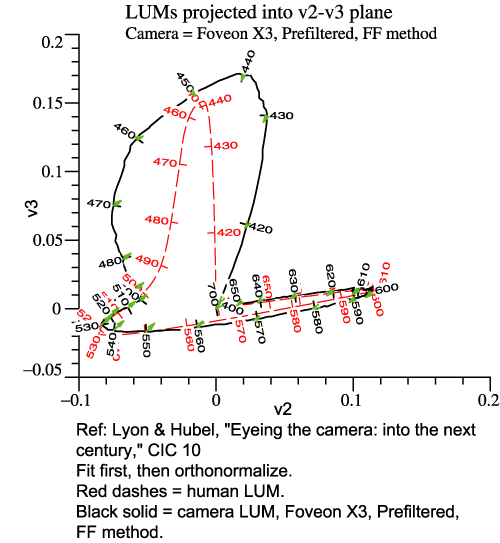

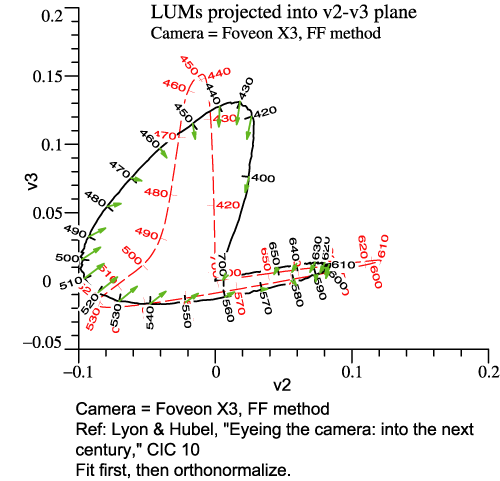

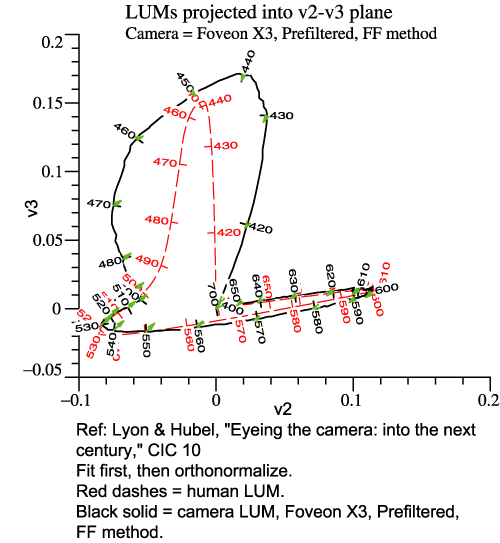

Red dashed = human LUM projected into v2-v3. Black solid = same projection of the Foveon X3's LUM. Heads of green arrows = fit to human by Foveon X3 functions, same projection.
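The LUM plots can be read as follows: each wavelength sample contributes one point whose coordinates are the three orthonormal basis functions at that wavelength, and the v2-v1 and v2-v3 panels keep two of the three coordinates. A small sketch, assuming the basis is stored as a list of rows (one row per wavelength, columns v1, v2, v3); `lum_points` and `project` are hypothetical helper names, and which coordinate goes on which plot axis is also an assumption.

```python
# Sketch of how LUM points and their 2-D projections can be tabulated.
# Assumption: omega has one row per wavelength and columns v1, v2, v3.

def lum_points(wavelengths, omega):
    """Pair each wavelength with its LUM vector (v1, v2, v3), one row of omega."""
    if len(wavelengths) != len(omega):
        raise ValueError("need one row of omega per wavelength")
    return [(lam, tuple(row)) for lam, row in zip(wavelengths, omega)]

def project(points, first, second):
    """Keep two components (0-based: v1 -> 0, v2 -> 1, v3 -> 2) for a 2-D plot."""
    return [(lam, (v[first], v[second])) for lam, v in points]
```

With these helpers, `project(points, 1, 0)` gives the v2-v1 view and `project(points, 1, 2)` the v2-v3 view; the same calls apply to the human basis and to CamOmega.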

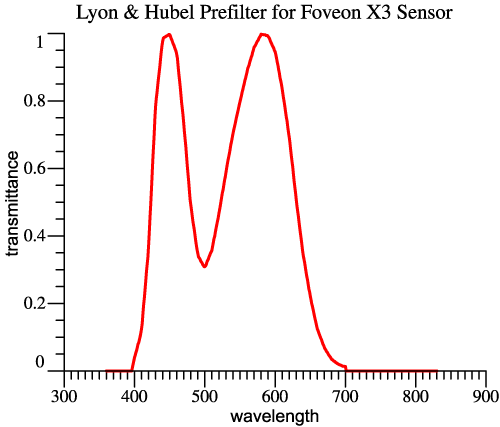

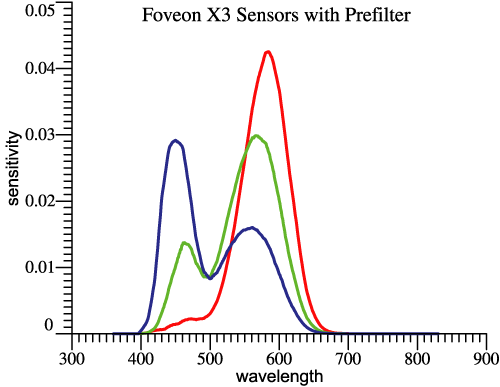

Foveon X3 Sensor with Prefilter

Same Foveon X3 Sensors as Before

This Prefilter³ Will Narrow the Functions and Reduce Overall Sensitivity in the Blue-Green Region

Foveon X3 Sensors Multiplied by the Prefilter Function³

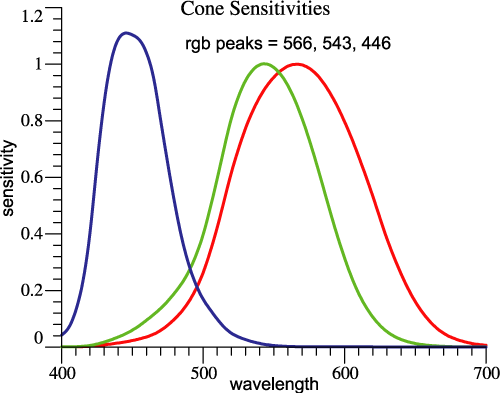
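Multiplying by the prefilter is a wavelength-by-wavelength product: each sensor function is scaled by the filter's transmittance at the same wavelength. A minimal sketch, assuming the filter is given as a transmittance between 0 and 1 sampled at the same wavelengths as the sensors; `apply_prefilter` is a hypothetical name. Because each factor is at most 1, no sensor can gain sensitivity, and wherever the transmittance dips the filtered functions are reduced there.

```python
# Sketch: apply a prefilter to camera sensor functions.
# Assumption: transmittance is a fraction in [0, 1], sampled at the
# same wavelengths as the rows of rgb_sens.

def apply_prefilter(rgb_sens, transmittance):
    """Scale every sensor function by the filter transmittance at each wavelength."""
    if len(rgb_sens) != len(transmittance):
        raise ValueError("sensor and filter must share wavelength samples")
    if any(t < 0 or t > 1 for t in transmittance):
        raise ValueError("transmittance must lie between 0 and 1")
    return [[s * t for s in row] for row, t in zip(rgb_sens, transmittance)]
```

The resulting matrix would then take the place of rgbSens in the fit-first steps.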

Cone Sensitivities Consistent with 2° Observer

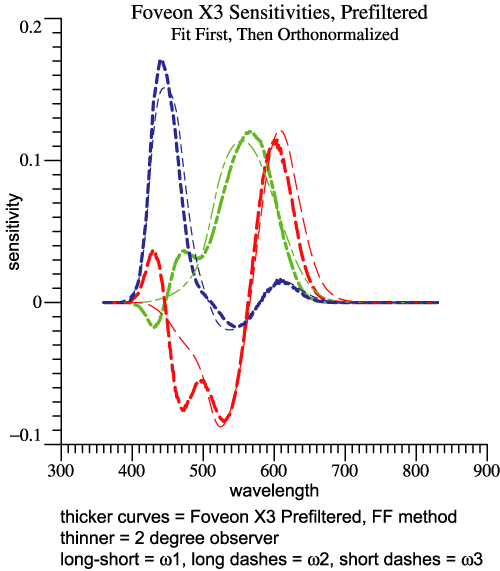

The thicker curves are the orthonormal basis for the prefiltered Foveon X3 sensors. Here the "fit first" method aligns the camera LUM well with the human LUM.

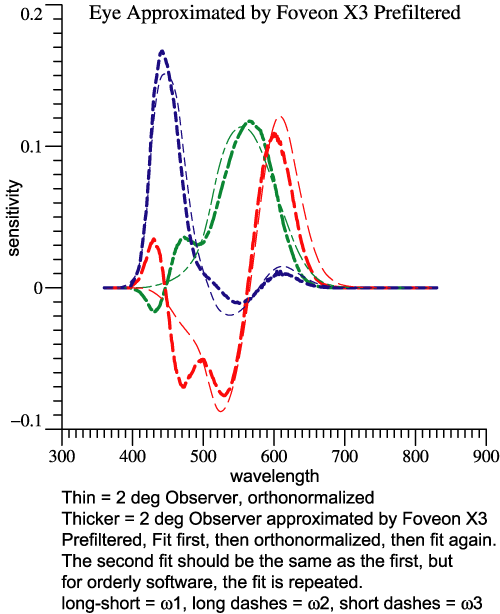

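Fit to Human Orthonormal Basis by Prefiltered Foveon X3 Functions. The best fit was found from the orthonormal basis at left. The best fit should come out the same whether or not the orthonormal basis is derived by the "fit first" method, because the fit is the projection of the human basis onto the space spanned by the camera functions, and every orthonormal basis for that space yields the same projection. In fact, the fit-first orthonormal basis was used.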
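The claim that the best fit does not depend on which orthonormal basis is used can be checked numerically. If Q has orthonormal columns spanning the camera space, the least-squares fit to a target T is Q(QᵀT). Replacing Q by QU for any orthogonal 3×3 matrix U leaves QQᵀ unchanged, since (QU)(QU)ᵀ = QUUᵀQᵀ = QQᵀ, so the fit is unchanged too. The sketch below uses toy numbers, not the Foveon data, and `best_fit` is a hypothetical helper.

```python
import math

# Sketch: the best fit within span(Q) is the same for any orthonormal basis Q of that span.
# Matrices are lists of rows; the data below are toy values, not measured spectra.

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def best_fit(q, target):
    """Least-squares fit of the target columns by q's orthonormal columns: Q (Q' T)."""
    return matmul(q, matmul(transpose(q), target))

r = 1 / math.sqrt(2)
q = [[r, r, 0], [r, -r, 0], [0, 0, r], [0, 0, r]]  # orthonormal columns
theta = 0.7
u = [[math.cos(theta), -math.sin(theta), 0],
     [math.sin(theta), math.cos(theta), 0],
     [0, 0, 1]]                                   # a rotation, so orthogonal
q_rotated = matmul(q, u)                          # another orthonormal basis, same span
target = [[1], [2], [3], [4]]

fit_a = best_fit(q, target)
fit_b = best_fit(q_rotated, target)
```

Both fits come out as the column (1, 2, 3.5, 3.5) up to rounding, even though the two bases differ.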

Red dashed = human LUM projected into v2-v1. Black solid = same projection of the prefiltered Foveon X3's LUM, found by the fit-first method. Heads of green arrows = fit to human by prefiltered Foveon X3 functions, same projection.

Red dashed = human LUM projected into v2-v3. Black solid = same projection of the prefiltered Foveon X3's LUM, found by the fit-first method. Heads of green arrows = fit to human by prefiltered Foveon X3 functions, same projection.

Foveon X3 Sensor with and without Prefilter


| | 2° observer himself | Foveon X3, no filter | Prefiltered Foveon X3 |
|---|---|---|---|
| Y | `Y = inv(OrthoBasis'*rgbbar)` | `Y = inv(CamOmega'*rgbSens)` | `Y = inv(CamOmega'*rgbSens)` |
| Column amplitudes | 0.0852, 0.409, 0.153 | 2.71, 9.06, 8.40 | 5.89, 16.5, 11.5 |
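The Y entries in the comparison table follow from a short argument. If the columns of an orthonormal basis Ω lie in the span of the functions in A (rgbbar for the observer, rgbSens for a camera), then Ω = AY for some 3×3 matrix Y. Multiplying on the left by Ωᵀ gives I = ΩᵀΩ = ΩᵀAY, so Y = (ΩᵀA)⁻¹, which is `inv(OrthoBasis'*rgbbar)` or `inv(CamOmega'*rgbSens)`. CamOmega qualifies because projection and Gram-Schmidt never leave the span of the camera functions. The sketch below, with a hypothetical helper `y_matrix`, computes Y and checks that AY reproduces Ω; the test values are toy numbers, not the data behind the table.

```python
# Sketch: Y = inv(Omega' * A), so that A * Y = Omega when Omega lies in span(A).
# Matrices are lists of rows (one row per wavelength sample).

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def inverse(m):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)]
           for i, row in enumerate(m)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def y_matrix(omega, a):
    """Return Y = inv(Omega' A), the 3x3 matrix with A Y = Omega."""
    return inverse(matmul(transpose(omega), a))
```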

| Prime color λs (nm) | red | green | blue |
|---|---|---|---|
| 2° observer | 603 | 538 | 446 |
| Foveon X3 | 587 | 510 | 430 |
| Foveon X3, prefiltered | 591 | 541 | 442 |
