| Jim Worthey • Lighting & Color Research • jim@jimworthey.com • 1-301-977-3551 • 11 Rye Court, Gaithersburg, MD 20878-1901, USA |


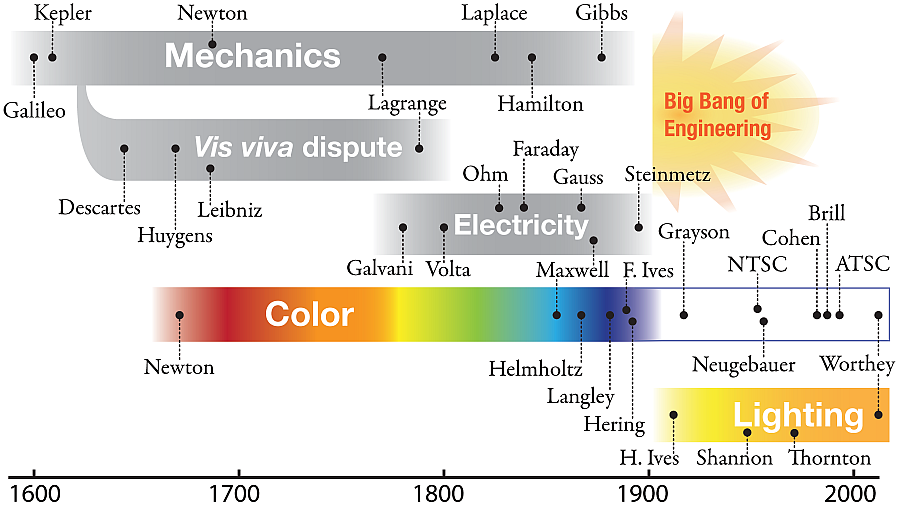

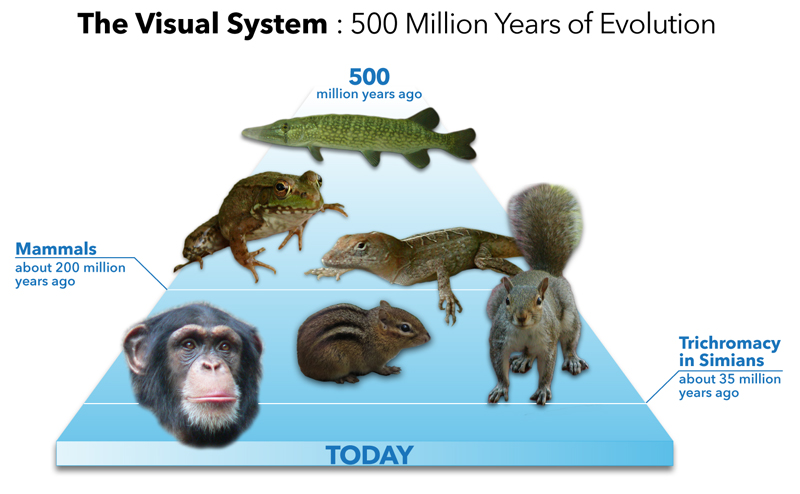

| Timeline of Science and Lighting |

| "A Mixture of Monochromatic Yellow and Blue Light" |

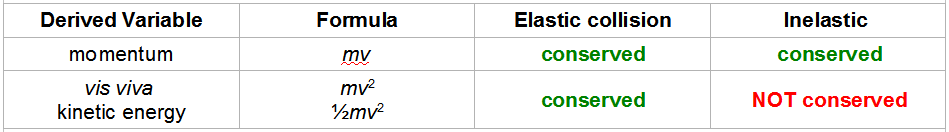

The black lines are Ives’s original drawing, while the orange and blue are added to represent his “mixture of monochromatic yellow and blue light.” Color vision is trichromatic, but monochromatic yellow stimulates two receptor systems because of the large overlap of the red and green sensitivities.

| Ives Took a Scientific Approach. |

|

|

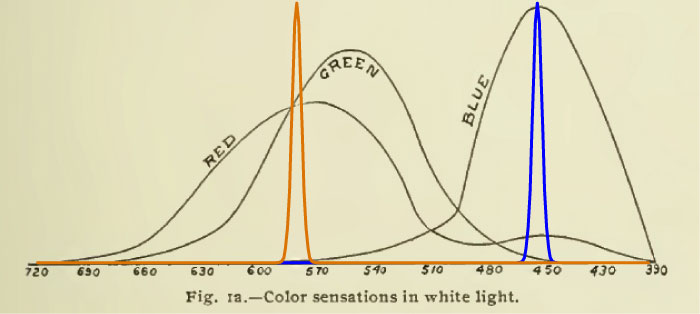

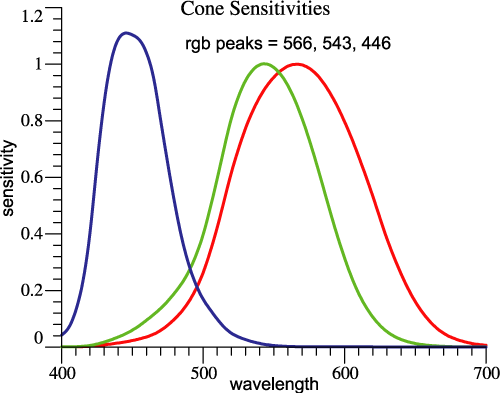

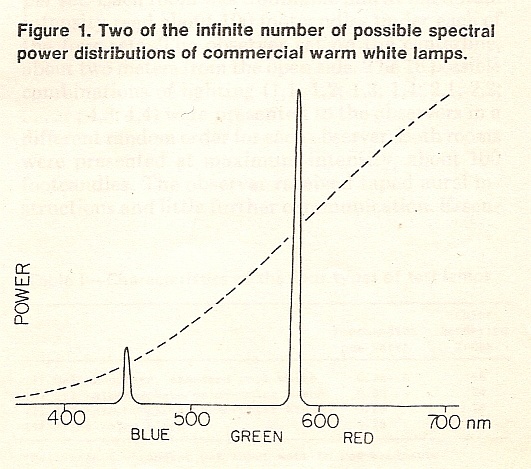

| A modern version of red-green-blue. | Example like Ives's: Papers lose their color when 4002 K blackbody is replaced by a 2-band light of the same chromaticity. |

| 2-bands Issue Since 1912 |

William A. Thornton 1923-2006 |

A figure from Thornton, William A. and E. Chen, “What is visual clarity?” J. Illum. Eng. Soc. 7(2):85-94 (January 1978). |

In the 1970s, Bill Thornton studied the idea of 3-band lamps. He re-discovered Herbert Ives's idea, figure at left. |

In the 1980s and since, Worthey has expanded on the two-bands idea, noting that many commercial lights shrink red-green contrasts among objects. See figure above. |

| Why Is it Hard to Discuss Vision and Lighting? |

The normal process of seeing is effortless. Talking about it is harder.

| Vectorial Color Overview (part 1) |

|

|

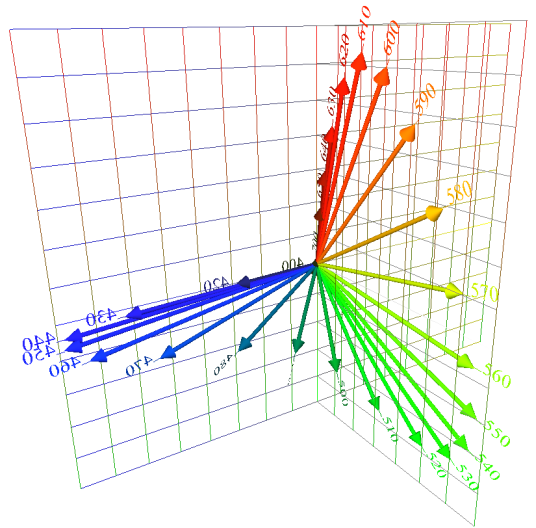

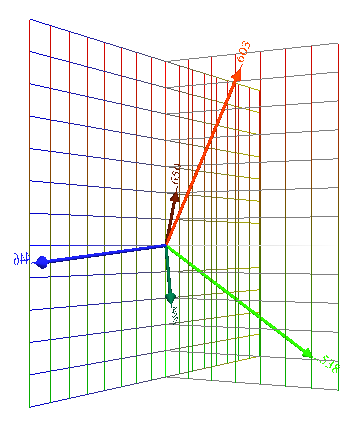

| Narrow-band lights of equal power map to vectors with different amplitudes and directions. |

Colored lights add vectorially. In this figure, equal-power components add to make the so-called Equal Energy Light. |

| Vectorial Color Overview (part 2) |

|

|

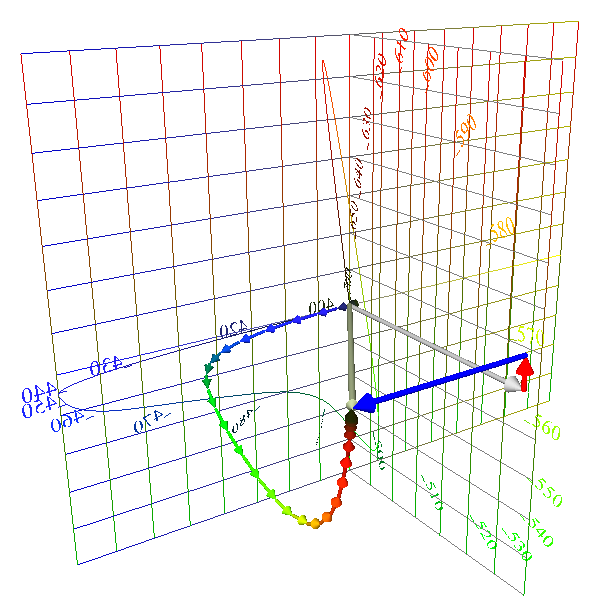

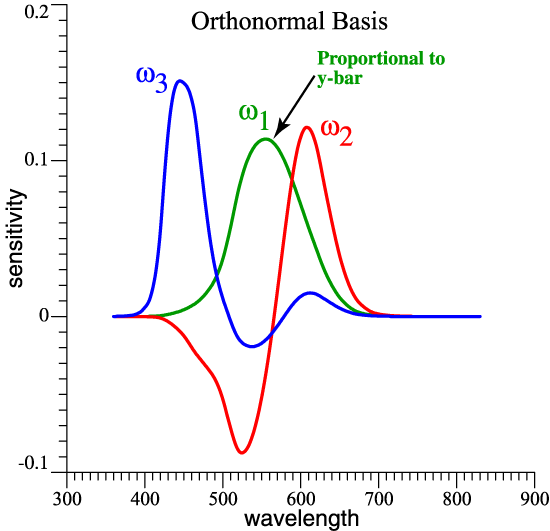

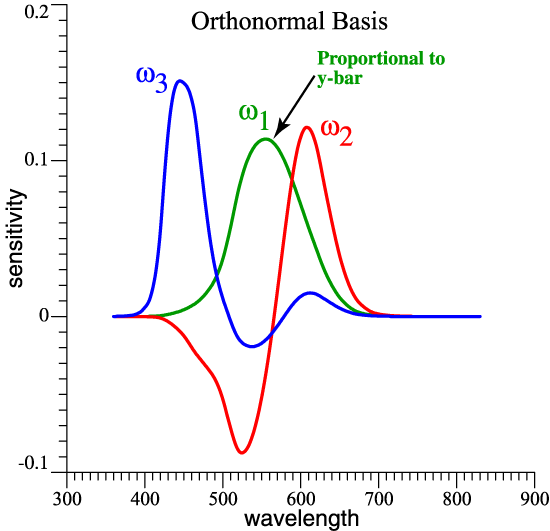

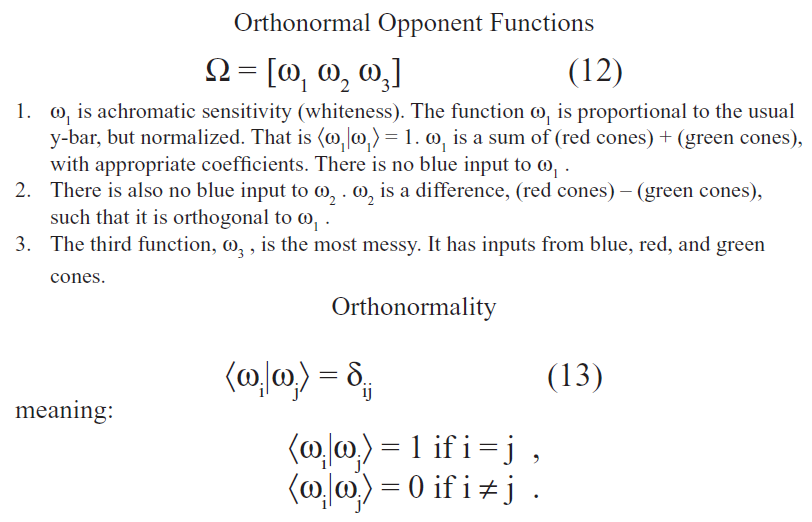

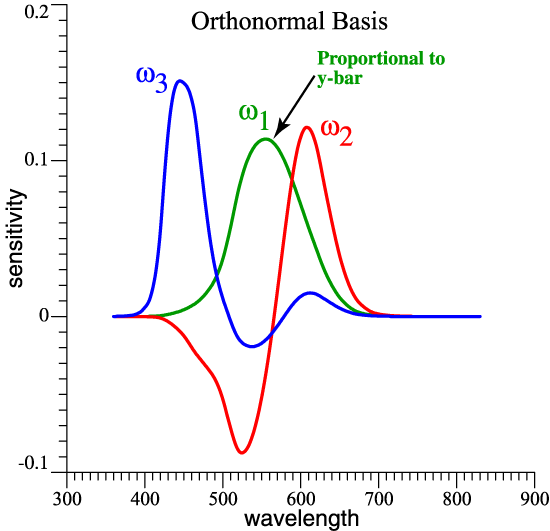

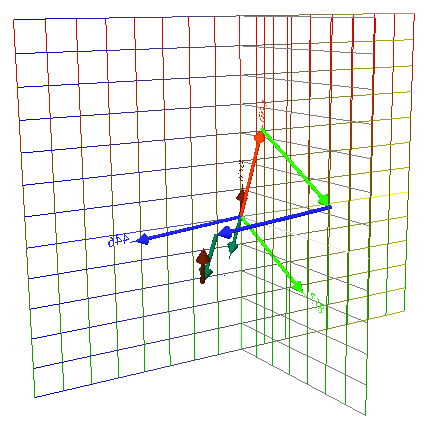

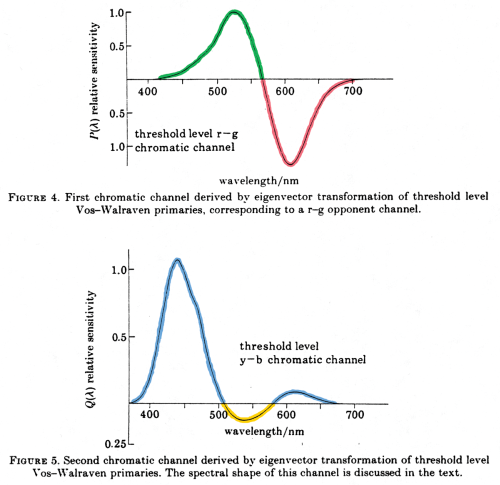

| Starting with a light (an SPD), to find its 3-vector, we need these Orthonormal Opponent Color Matching Functions. |

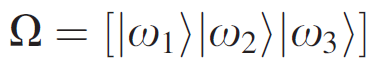

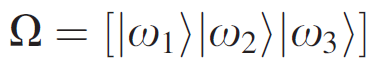

For convenience, the functions ω1, ω2, ω3 become the columns of matrix Ω . |

| Vectorial Color Overview (part 3) |

|

Jozef B. Cohen 1921-1995 |

| Legacy Understanding |

|

Fictitious but realistic color-matching data. |

|

|

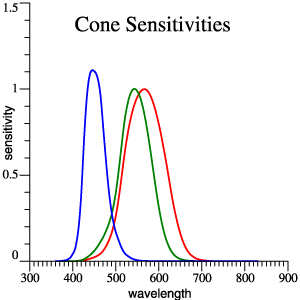

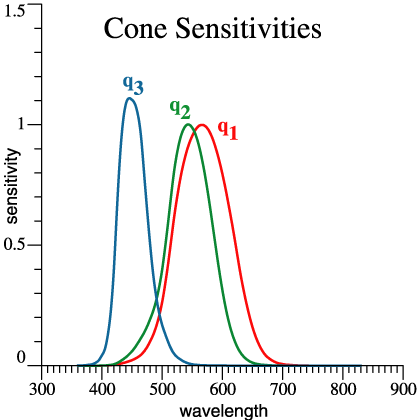

Cones, red, green and blue. |

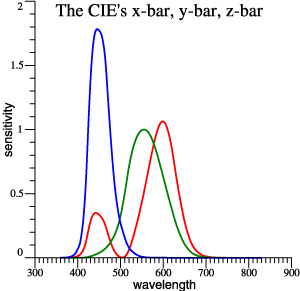

| Traditional x-bar, y-bar, z-bar |

|

|

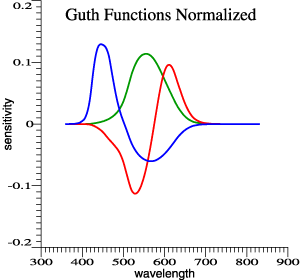

Guth's 1980 model, normalized. Achromatic function is proportional to y-bar. |

|

Linear transformations of color-matching data predict the same matches.

This is Figure 1. < The only numbered figure! > |

|||

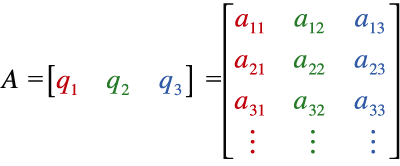

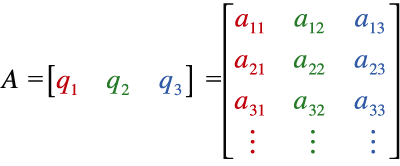

| 3 functions become the columns of a matrix: |

|

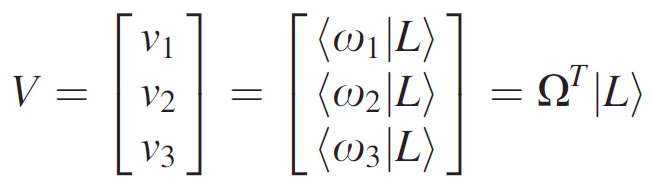

(Eq. 1) |

| A set of color matching functions (CMFs) becomes the columns of a matrix A. Or, if you like, the columns of A are a set of linearly independent functions, usually 3 functions. |

|

| SPD as a Column Vector |

| The spectral power distribution of any light can be written as a column vector L1. It is then summarized by a tristimulus vector V,

$$V = A^{T} L_1 \qquad (2)$$

Light L1 is a color match for light L2 if

$$A^{T} L_1 = A^{T} L_2 \qquad (3)$$

Refer again to Figure 1. If Eq. (3) holds for one set of CMFs A, then it will hold for the other sets. That much is standard teaching. |
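As a small numeric illustration of Eqs. (2) and (3), here is a sketch in Python. The 4-sample "spectra," the matrix `A`, and the transformation `M` are made-up toy numbers, not real color-matching data; the function names are hypothetical. It shows two different SPDs with the same tristimulus vector, and that the match survives when the CMFs are replaced by an invertible linear transformation of themselves.

```python
# Toy illustration (made-up numbers, 4 wavelength samples instead of hundreds).

def tristimulus(A, L):
    """Return V = A^T L, where A is N x 3 (a list of rows) and L has N entries."""
    return [sum(A[n][k] * L[n] for n in range(len(L))) for k in range(len(A[0]))]

def matmul(X, Y):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

# The columns of A play the role of three color matching functions.
A = [[1, 0, 0],
     [0, 1, 0],
     [0, 0, 1],
     [1, 1, 1]]

L1 = [2.0, 3.0, 4.0, 5.0]
# L2 differs from L1 by a spectrum that A cannot "see" (A^T times it is zero).
L2 = [2.5, 3.5, 4.5, 4.5]

V1 = tristimulus(A, L1)
V2 = tristimulus(A, L2)

# A second set of CMFs: an invertible linear transformation of the first set.
M = [[1, 1, 0],
     [0, 1, 1],
     [0, 0, 1]]
B = matmul(A, M)

W1 = tristimulus(B, L1)
W2 = tristimulus(B, L2)

print("match under A:", V1 == V2)
print("match under B:", W1 == W2)
```

The reason is one line of algebra: with B = A M, the new tristimulus vector is Bᵀ L = Mᵀ (Aᵀ L), so equal values of Aᵀ L give equal values of Bᵀ L.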

|

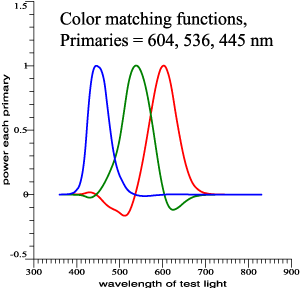

| Color Mixing Experiments: Choice of 3 λs. |

| Strongly Acting Wavelengths |

| Some Credit to Tom N. Cornsweet |

|

In his 1970 book, Tom Cornsweet used vector diagrams to discuss overlapping receptor sensitivities.

|

| Jozef Cohen and the Fundamental Metamer |

Jozef B. Cohen's Book |

|

Cohen sought an invariant presentation of color mixing facts.

|

4 Legacy Graphs |

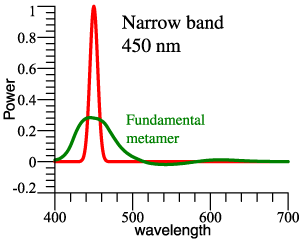

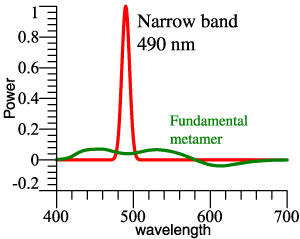

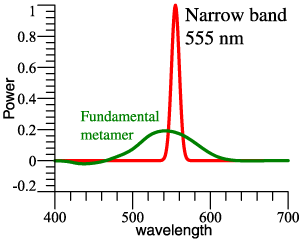

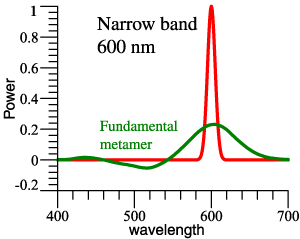

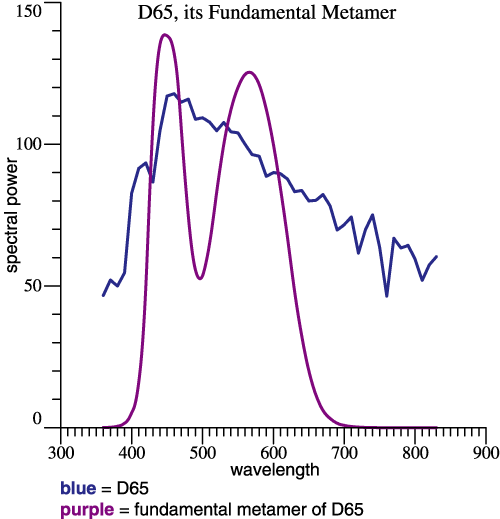

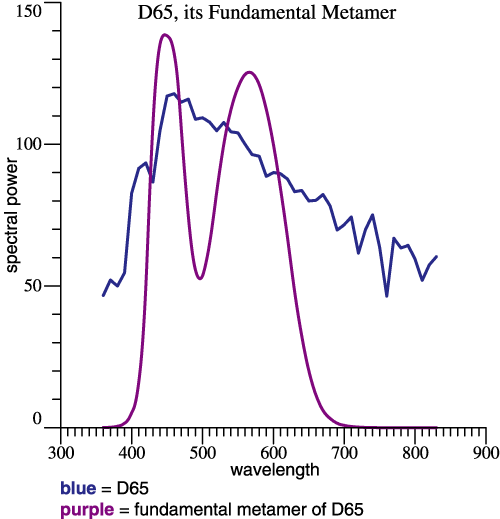

| Examples of Fundamental Metamers. |

| 4 items:

Fundamental metamer of a narrow band. 1 item: Fundamental metamer of D65. Fundamental metamer is always a smooth curve. |


| Joe Cohen's easy method: Matrix R |

| Cohen needed a way to find a fundamental metamer L* . As before, A is a matrix whose columns are a set of CMFs, | |

|

. (1) |

| Given L and A, we want to find L*. Now a student might look up the Moore-Penrose pseudo-inverse and get a numerical answer L* with that. Lucky for us, Cohen did not have Wikipedia and solved the problem for himself, which led him to more ideas. Long story short, Cohen's method:

$$L^{*} = R\,L \qquad (2)$$

where

$$R = A\,(A^{T}A)^{-1}A^{T} \qquad (3)$$

|
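To make Cohen's formula concrete, here is a minimal sketch in plain Python. The helper names (`r_cohen`, `solve`, and so on) and the 4-sample toy matrix are assumptions for illustration, not the author's code or data. The sketch forms R = A(AᵀA)⁻¹Aᵀ, applies it to a toy light, and checks three properties: L* is a metamer of L, R is a projection (R·R = R), and R does not change when A is replaced by another basis for the same functions.

```python
def transpose(X):
    return [list(col) for col in zip(*X)]

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def solve(M, B):
    """Solve M X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [list(map(float, M[i])) + list(map(float, B[i])) for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def r_cohen(A):
    """R = A (A^T A)^-1 A^T, computed by solving rather than inverting."""
    At = transpose(A)
    return matmul(A, solve(matmul(At, A), At))

def max_abs_diff(X, Y):
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

# Toy CMFs (columns of A) and a toy light L, both made up.
A = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
L = [[2.0], [3.0], [4.0], [5.0]]           # column vector

R = r_cohen(A)
L_star = matmul(R, L)                      # fundamental metamer, L* = R L

At = transpose(A)
metamer_error = max_abs_diff(matmul(At, L_star), matmul(At, L))
idempotent_error = max_abs_diff(matmul(R, R), R)

# Same three functions re-mixed by an invertible matrix M: R should not change.
M = [[1, 1, 0], [0, 1, 1], [0, 0, 1]]
invariance_error = max_abs_diff(r_cohen(matmul(A, M)), R)

print([round(row[0], 6) for row in L_star])
```

For this toy case the fundamental metamer works out by hand to (1, 2, 3, 6), which has the same Aᵀ L = (7, 8, 9) as the original light (2, 3, 4, 5).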

|

| Matrix R, continued. |

|

Fun Facts about Matrix R

|

| Fundamental Metamer Example. |

|

At left, the blue curve shows L = D65. The purple curve is L*, the fundamental metamer of D65. The two curves are metamers in the ordinary sense. L*, a linear combination of CMFs, is found by:

$$L^{*} = R\,L \qquad (4)$$

Eq. (4) has '=' and not '≈' because L* by definition is the least-squares approximation. So that’s Jozef Cohen’s Highly Original Contribution. Now, a few screens above, we were talking about vectors... |

| Cohen References: Cohen, Jozef, “Dependency of the spectral reflectance curves of the Munsell color chips,” Psychonom. Sci. 1, 369-370 (1964). Cohen, Jozef, Visual Color and Color Mixture: The Fundamental Color Space, University of Illinois Press, Champaign, Illinois, 2001, 248 pp. <2014 update: book is out of print. Publisher has allowed a version to be posted on Google Books. Check it out! > |

|

| Orthonormal

Opponent Color Matching Functions. |

| Orthonormal

Opponent Color Matching Functions: Description. |

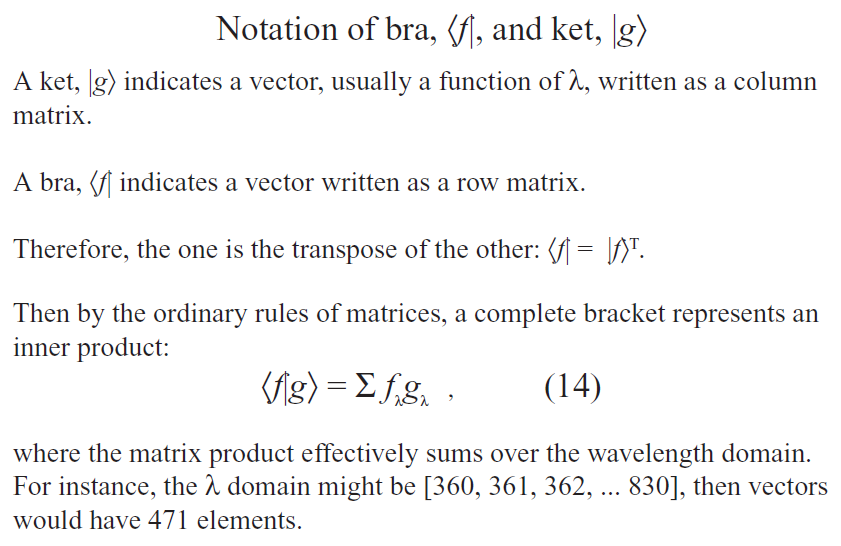

| Bra

and

Ket Notation |

| Working Class

Summary |

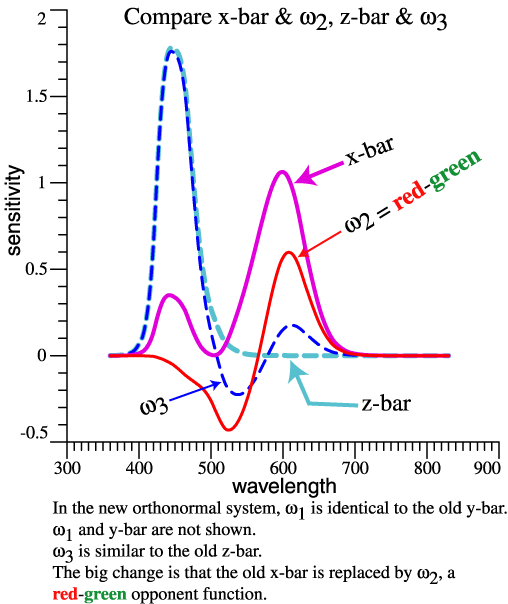

| Although many clever ideas (from Cohen, Guth, Thornton, Buchsbaum, etc.) guided its development, in the end the orthonormal basis is not radically new: |

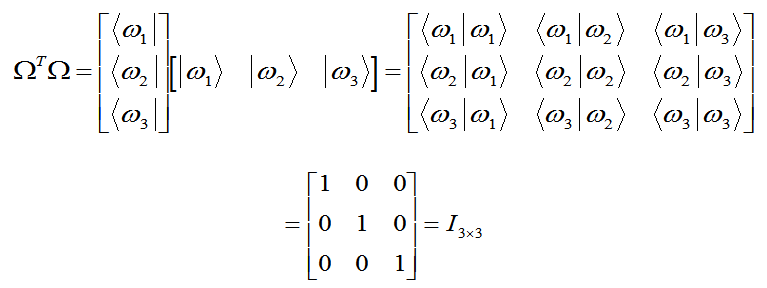

| Fun with Matrices |

|

(15) |

| Convenience of the Orthonormal Basis |

| Recall from a few screens above: |

|

Given light L, find its 3-vector V by a matrix multiplication. Ω is known and constant. |

| Starting with a light (an SPD), to find its 3-vector, we need these Orthonormal Opponent Color Matching Functions. |

For convenience, the functions ω1, ω2, ω3 become the columns of matrix Ω. |

| Meaning of Tristimulus Values |

| v1 = whiteness = achromatic or black-white dimension

v2 = redness or greenness

v3 = blueness or yellowness

So, we can say that the tristimulus values have intuitive meaning. But that's not all.

|L*〉 = v1|ω1〉 + v2|ω2〉 + v3|ω3〉

If we express the fundamental metamer L* by an orthonormal function expansion, the tristimulus values are the coefficients. The same values have mathematical meaning. |
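The step from Cohen's projection to this expansion can be written out in matrix form. The sketch below assumes only what is stated elsewhere on this page: the columns of Ω are orthonormal and span the same space as the CMFs in A, so matrix R is the same whether it is built from A or from Ω, and V = Ωᵀ L is the 3-vector of light L.

```latex
\begin{aligned}
R &= \Omega\,(\Omega^{T}\Omega)^{-1}\,\Omega^{T} = \Omega\,\Omega^{T}
   && \text{since } \Omega^{T}\Omega = I, \\
L^{*} &= R\,L = \Omega\,(\Omega^{T} L) = \Omega\,V
   = v_1\,\omega_1 + v_2\,\omega_2 + v_3\,\omega_3 .
\end{aligned}
```

So the same three numbers serve as the tristimulus values of L and as the expansion coefficients of L*.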

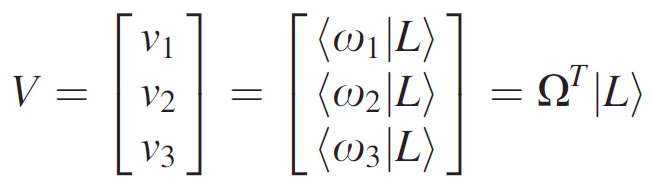

| Graphing a Vector |

|

So, we can calculate one vector from the origin. |

| 5 Vectors |

|

Or five vectors from the origin. |

| 5 Vectors Added |

|

Or add some vectors vectorially. That is, they add tail to head. |
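Tail-to-head addition is just a running sum. The short Python sketch below uses five made-up 3-vectors (v1, v2, v3), not values from the figures, and lists the vertices of the chain; the last vertex is the vector sum, which does not depend on the order in which the arrows are drawn.

```python
from itertools import accumulate

def add_vectors(u, v):
    """Component-wise sum of two 3-vectors."""
    return tuple(a + b for a, b in zip(u, v))

# Made-up 3-vectors (v1, v2, v3) standing in for five narrow-band components.
bands = [(0.5, 0.0, 1.0),
         (1.0, -1.5, 0.5),
         (2.0, -0.5, -0.5),
         (1.5, 1.5, -0.5),
         (0.5, 0.5, 0.0)]

# Vertices of the chain: each arrow starts where the previous one ended.
chain = [(0.0, 0.0, 0.0)] + list(accumulate(bands, add_vectors))
total = chain[-1]

# Drawing the arrows in reverse order gives a different path, same end point.
reverse_total = list(accumulate(reversed(bands), add_vectors))[-1]

print(chain)
print(total == reverse_total)
```

Plotting consecutive pairs of `chain` as arrows gives the kind of vector chain used later to compare lights.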

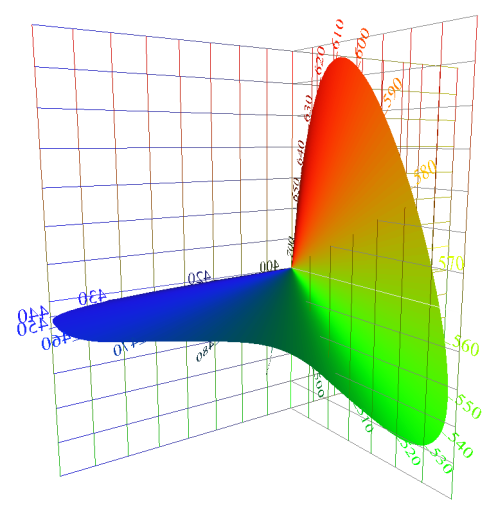

| Locus of Unit Monochromats |

| Locus of Unit Monochromats (continued) |

|

I sometimes show the Locus of Unit Monochromats (LUM) by a surface. The locus is really the curve along the edge of the surface. |

| Wavelengths of Strong Action |

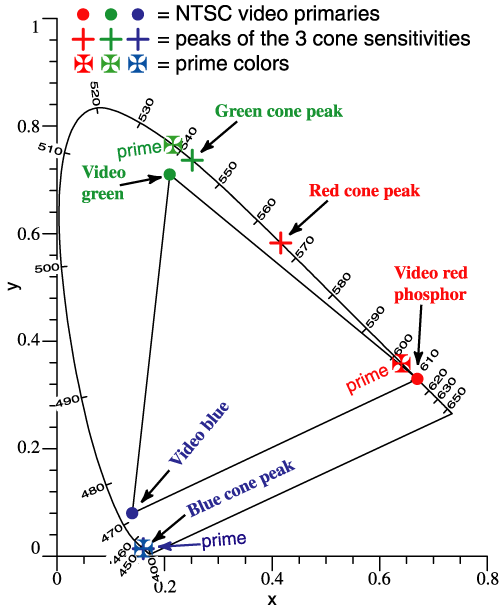

| The NTSC phosphors are approximately at Thornton’s Prime Colors, 603, 538, 446 nm. |

For practical purposes, the wavelengths of the longest vectors are the same as the prime colors, and about the same as the NTSC phosphors. |


| Composition of a White Light |

|

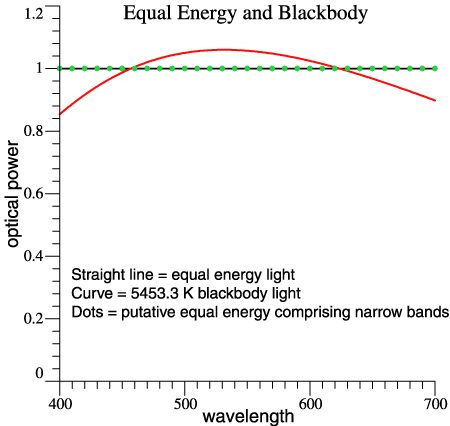

The so-called "equal energy light" is one that has constant power per unit wavelength across the spectrum, indicated by a solid black line in the figure at the right. The straight-line spectrum is similar to a more realistic light, 5453 K blackbody (or 5500 if you like). Now assume an equal-energy light that packs all of its power at the 10-nm points, 400, 410, ... , shown by the green dots. |

|

| Composition of a White Light (continued) |

| Opponent Colors and Information Transmission |

| In 1983, Buchsbaum and Gottschalk derived an opponent-color system to optimize information transmission. They started with cone functions and got a result like the orthonormal basis Ω. It is perhaps intuitive that Ω is helpful for engineering work because redundancy is taken out. The Buchsbaum and Gottschalk result supports the use of Ω for image compression and propagation-of-errors, for example. [Gershon Buchsbaum and A. Gottschalk, “Trichromacy, opponent colours coding and optimum colour information transmission in the retina,” Proc. R. Soc. Lond. B 220, 89-113 (1983).] |

|

| Some Lights Have Less Red and Less Green |

|

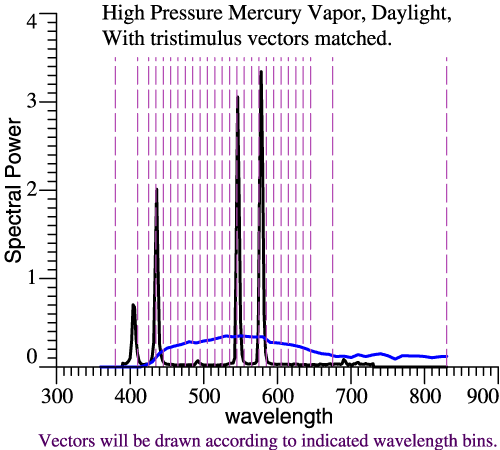

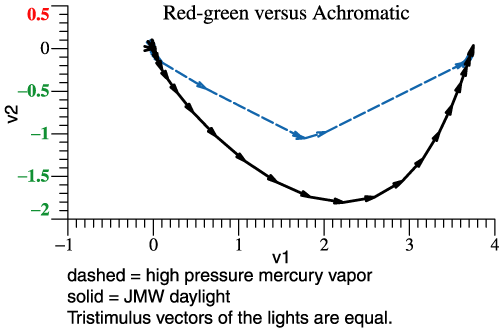

A white light has net redness or greenness that is small or zero. The same white point can be reached by different lights in different ways. SPDs of 2 lights are plotted at right. The black line is High Pressure Mercury Vapor light, while the blue is JMW Daylight, adjusted to have the same tristimulus vector. (Yes, that means they are matched for illuminance and chromaticity.) The wavelength domain is chopped according to the dashed vertical lines. The wavelength bands are 10 nm, except at the ends of the spectrum, with most bands centered at multiples of 10 nm. If one light then multiplies the columns of Ω, those products could be graphed as a distorted LUM, but we skip that step. |

|

| Instead, the color composition of each light will be graphed as a chain of vectors. | |

| Comparing Color Composition of Lights |

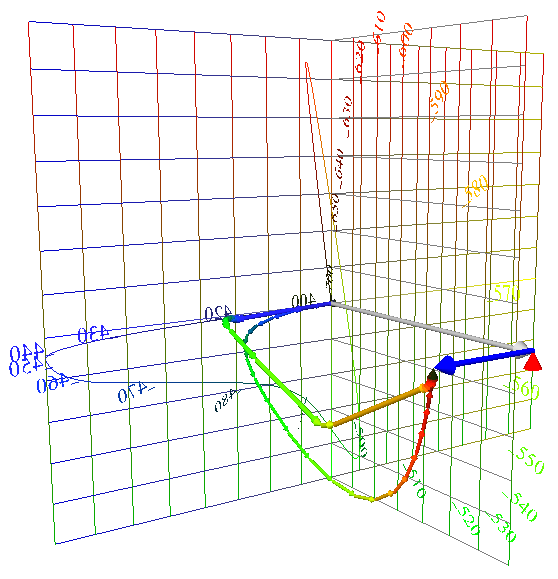

| Now the same two lights are compared in their vector composition. The smooth chain of thin arrows shows the composition of daylight. Slightly thicker arrows show the mercury light. The mercury light radiates most of its power in a few narrow bands, leading to a few long arrows that leap toward the final white point. Compared to “natural daylight,” the mercury light makes a smaller swing towards green, and a smaller swing back towards red. Such a light would leave the red pepper starved for red light with which to express its redness. |

|

| Above, two lights are compared by the narrow-band components of their tristimulus vectors. At right the same information is shown, but projected into the v1-v2 plane. The loss of red-green contrast is the main issue with lights of “poor color rendering,” and that shows up in this flat graph. If you were really designing lights, you might use the v1-v2 projection as a main tool. You might want to add wavelength labels to the vectors. |

|

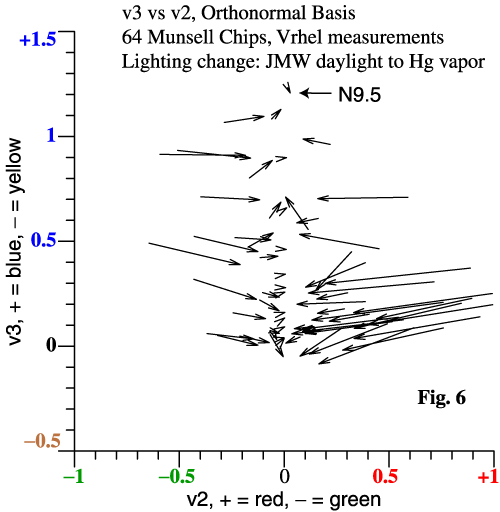

| Other interesting data can be plotted in Cohen’s space. Suppose that the 64 Munsell chips from Vrhel et al. are illuminated first by daylight and then by the mercury light. Since the mercury light lacks red and green, we expect it to create a general loss of red-green contrast among the 64 chips. The graph at right is a projection into the v2-v3 plane. Each arrow tail is the tristimulus vector of a paint chip under daylight. The arrowhead is the same chip under the mercury light. The lightest neutral paper is N9.5, and is a proxy for the lights. Notice that 3-vectors projected into a plane still have the properties of vectors in the 2D plane. As expected, red and green paint chips suffer a tremendous crash towards neutral. [Michael J Vrhel, Ron Gershon, LS Iwan, "Measurement and analysis of object reflectance spectra," Color Res. Appl. 1994;19:4–9.] |

|

| Actual neutral papers appear as arrows of zero length. For an alternate presentation about comparing lights, please see “How White Light Works,” and the related graphical material. |

|

| Cameras and the "Maxwell-Ives Criterion" |

| One Stumbling Block |

| Compare Camera LUM to that of Human |

| The Fit First Method |

| Conceptually, the camera’s LUM (spheres) is more fundamental than the fit to the human LUM (arrowheads). The trick of the Fit First Method is to find the best fit first, then find the LUM from that. Here is the computer code:

    Rcam = RCohen(rgbSens)            # 1
    CamTemp = Rcam*OrthoBasis         # 2
    GramSchmidt(CamTemp, CamOmega)    # 3

The camera’s 3 λ sensitivities are stored as the columns of array rgbSens. Because of the invariance of projection matrix R, it doesn’t matter how the functions are normalized, or whether they are actually in sequence r, g, b. Statement 1 finds Rcam, the projection matrix R for the camera. RCohen() is a small function, but conceptually,

    RCohen(A) = A*inv(A'*A)*A' . (7)

In other words, step 1 applies Cohen’s formula for the projection matrix. Then Rcam is the projection matrix for the camera.

In step 2, the columns of OrthoBasis are the human orthonormal basis, Ω. The matrix product Rcam*OrthoBasis finds the projection of the human basis into the vector space of the camera. But the wording about projection is another way of saying that step 2 finds the best fit to each ωi by a linear combination of the camera functions. So, step 2 is the “fit” step. (More programming specifics.) |
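Steps 1 and 2 can be tried out in plain Python. This is a sketch under stated assumptions, not the author's program: the names `r_cohen`, `rgb_sens`, and `ortho_basis` are Python stand-ins for `RCohen`, `rgbSens`, and `OrthoBasis`, and the 5-sample sensitivities and "human" functions are made-up numbers (the stand-in human functions are not even orthonormal, which does not matter for the fit step). The checks illustrate two claims from the text: the product is a least-squares fit, and normalization or ordering of the sensor columns has no effect on R.

```python
def transpose(X):
    return [list(col) for col in zip(*X)]

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def solve(M, B):
    """Solve M X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [list(map(float, M[i])) + list(map(float, B[i])) for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def r_cohen(A):
    """Cohen's projection matrix, A (A^T A)^-1 A^T."""
    At = transpose(A)
    return matmul(A, solve(matmul(At, A), At))

# Made-up camera sensitivities: rows are wavelengths, columns are r, g, b.
rgb_sens = [[0.1, 0.0, 0.9],
            [0.2, 0.5, 0.6],
            [0.5, 1.0, 0.1],
            [1.0, 0.4, 0.0],
            [0.6, 0.1, 0.0]]
# Made-up stand-in for the three human functions (columns).
ortho_basis = [[0.2, -0.1, 0.8],
               [0.5, -0.6, 0.3],
               [0.7, -0.2, -0.4],
               [0.4, 0.7, -0.3],
               [0.2, 0.3, -0.1]]

r_cam = r_cohen(rgb_sens)                # step 1: projection matrix of the camera
cam_temp = matmul(r_cam, ortho_basis)    # step 2: best fit to each human function

# Least-squares property: the misfit is orthogonal to every camera sensitivity.
residual = [[o - c for o, c in zip(ro, rc)] for ro, rc in zip(ortho_basis, cam_temp)]
leftover = matmul(transpose(rgb_sens), residual)
max_leftover = max(abs(x) for row in leftover for x in row)

# Invariance: rescaling and reordering the sensor columns leaves R unchanged.
shuffled = [[2.0 * g, 0.5 * b, 3.0 * r] for r, g, b in rgb_sens]
r_shuffled = r_cohen(shuffled)
max_diff = max(abs(x - y) for ra, rb in zip(r_cam, r_shuffled) for x, y in zip(ra, rb))

print(max_leftover, max_diff)   # both should be at rounding-error level
```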

|

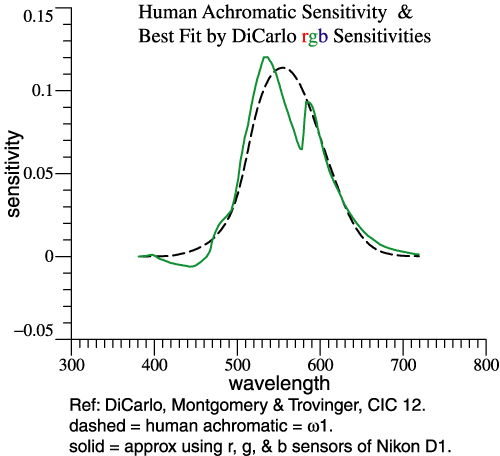

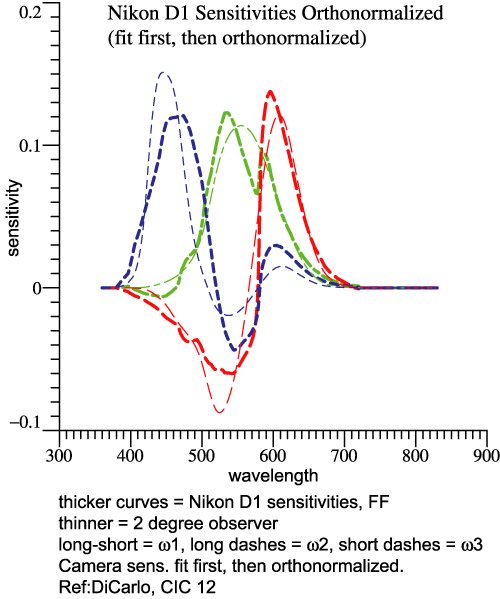

| Step 2 does 3 fits at once, but let's look at just one. At right, the dashed curve is ω1, the human achromatic function. The camera in question happens to be a Nikon D1. The solid curve is a linear combination of that camera’s r, g, and b functions that is the least-squares best fit to ω1. There would be other ways to solve the curve-fitting problem, but projection matrix R is convenient. A best fit is found for each ωj separately. The resulting re-mixed camera functions are not an orthonormal set. |

|

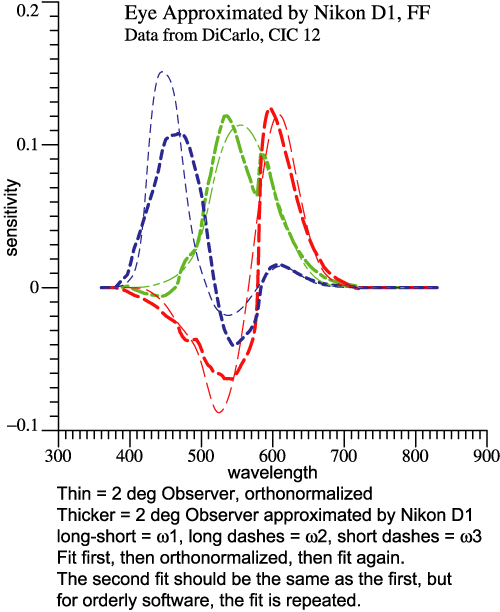

| Step 3, Orthonormalize the Re-mixed Camera Functions |

|

| Since the re-mixed camera functions are computed separately, they are not orthonormal and would not combine to map out a true Locus of Unit Monochromats. But they mimic Ω and are in the right sequence. We need to make them orthonormal, which is what the Gram-Schmidt method does, Step 3. |
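For readers who have not met Gram-Schmidt, here is a minimal sketch in Python. The function name `gram_schmidt` and the three 4-sample input columns are made up for illustration; the author's `GramSchmidt(CamTemp, CamOmega)` routine is not reproduced here. The functions are processed in the order given, so the first output is simply the first input scaled to unit length, which is why it matters that the fitted functions are "in the right sequence."

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(columns):
    """Orthonormalize a list of column vectors, keeping their order."""
    basis = []
    for v in columns:
        w = list(v)
        for q in basis:
            c = dot(q, w)                        # component of w along q
            w = [a - c * b for a, b in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        basis.append([a / norm for a in w])
    return basis

# Three made-up, non-orthogonal functions sampled at 4 wavelengths.
cam_temp_cols = [[1.0, 1.0, 1.0, 1.0],
                 [1.0, 2.0, 0.0, -1.0],
                 [0.0, 1.0, 3.0, 1.0]]

cam_omega_cols = gram_schmidt(cam_temp_cols)

# Gram matrix of the result: should be the 3 x 3 identity.
gram = [[dot(p, q) for q in cam_omega_cols] for p in cam_omega_cols]
for row in gram:
    print([round(x, 12) for x in row])
```

Each output function is a linear combination of the inputs up to and including its own position, so the outputs span the same space as the re-mixed camera functions.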

|

|

|

|

The two sets of graphs above look similar. But the one on the left shows the set of “fit” functions. The one on the right shows the orthonormal basis of the Nikon D1. The thinner curves pertain to the 2° observer, the thicker ones to the camera.

Why Does it Matter?

When you have the orthonormal basis, for the eye or for a camera, you can do many things with it. Combining the 3 functions generates the Locus of Unit Monochromats. The orthonormal property leads to some simple derivations. On the Q&A page, see "Can we have fun with orthonormal functions?" |

|

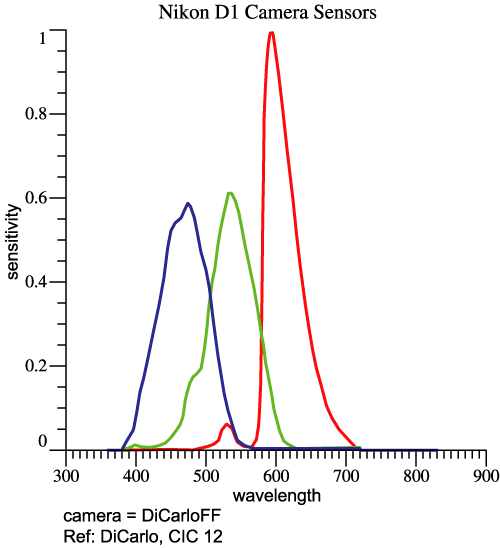

| Camera Example, Nikon D1 |

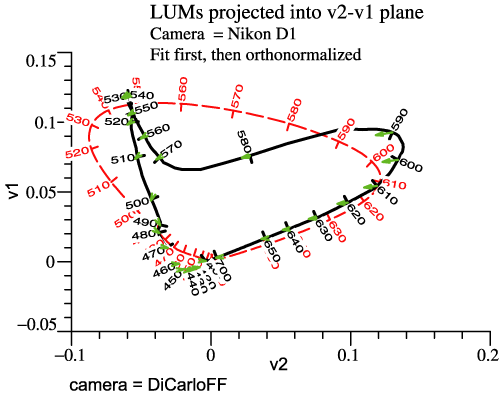

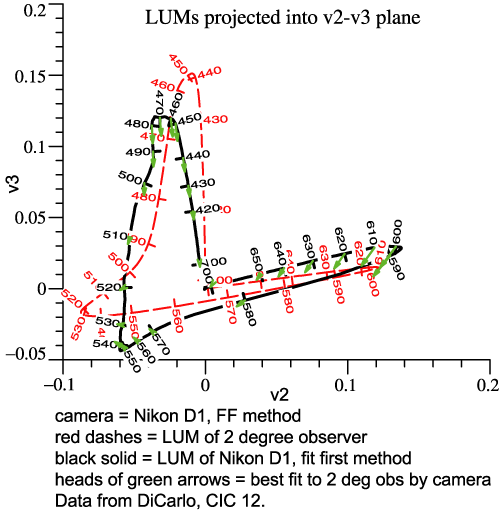

| The last 4 graphs above pertained to the Nikon D1, based on data from CIC 12. At right are the camera’s red, green, and blue sensitivities. The camera’s LUM can be compared to the eye’s. Rather than another perspective picture, we now view the LUMs in orthographic projection (2 graphs below). The dashed curves are the human locus. The solid curves are the camera’s locus, while the tips of the small green arrows are points on the best-fit sensitivity function. |

|

|

|

| Now you may say “These curves mean nothing to me!” That may be true at first, but the graphs contain a complete description of the camera sensor, with no hidden assumptions, and no information discarded.

Finding Some Meaning in the Camera's LUM

Consider the left-hand graph, “LUMs projected into v2-v1 plane.” Only the red and green receptors contribute to the human LUM in this view, and v1 is the achromatic dimension for human, based on good old y-bar. In this plane at least, the particular camera tends to confuse wavelengths in the interval 510 to 560 nm, which are nicely spread out as stimuli for human. Yellows, say 560 to 580 nm, have lower whiteness than they would for human. The camera has other differences from human that may be harder to verbalize. To the extent that finished photos look wrong, one could revisit these graphs for insight.

More Examples

Five detailed examples were prepared in 2006, and they are linked from the further examples page. For instance, Quan’s optimal sensor set indeed looks good in any of the graphical comparisons to the 2° observer. (See http://www.cis.rit.edu/mcsl/research/PDFs/Quan.pdf .) |

|

| Something Completely Different: a 4-band Array |

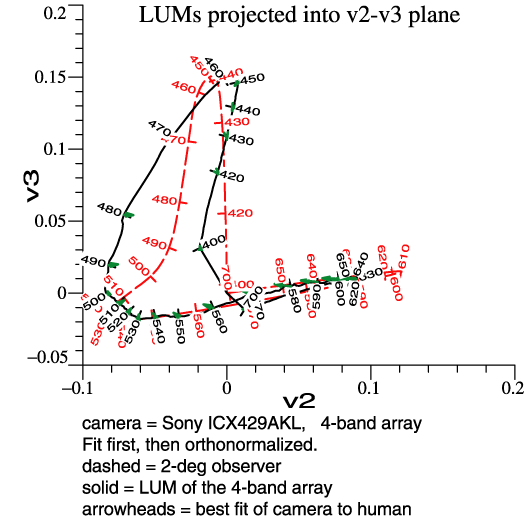

| Sony publishes a

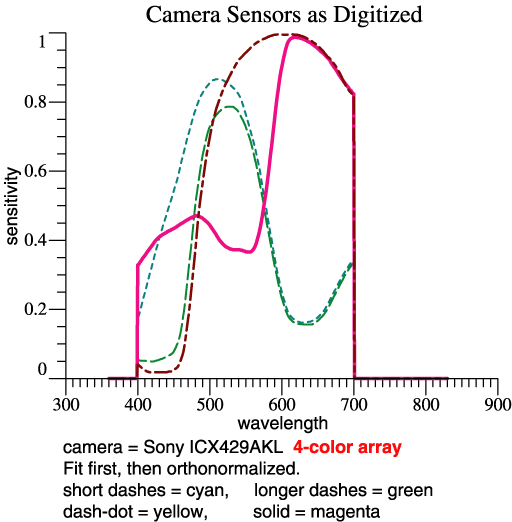

specification for a 4-band sensor array, the

ICX429AKL. I’m not sure of the intended application,

but it could potentially be applied in a normal

trichromatic camera. The Fit First Method readily

fits the 4 sensors to the 3-function orthonormal

basis. |

|

| The four sensitivities are seen

at right. The key steps look the same: Rcam

= RCohen(rgbSens)

. Recall that

the projection matrix Rcam is a

big square matrix of dimension N×N, where N is the

number of wavelengths, which might be 471. The 4th

sensor adds a column to the array rgbSens, but does

not change the dimensions of the result Rcam. After the key

"fit first" steps, I did have to re-think some

auxiliary calculations because of the 4-column

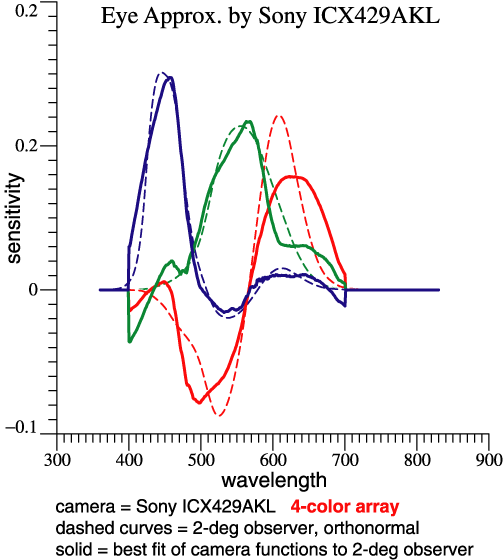

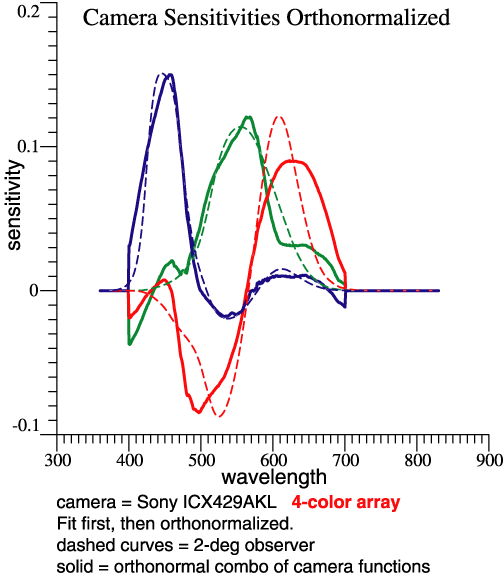

sensor matrix.CamTemp = Rcam*OrthoBasis GramSchmidt(CamTemp, CamOmega) |

|

| The camera's orthonormal basis CamOmega comprises 3 vectors that are linear combinations of the 4 vectors in rgbSens. I wanted to find the 4×3 transformation matrix relating the one to the other, in order to stimulate thinking about noise. The program output itself explains the method as follows:

    Similar to Eqs. 15-18 in CIC 14 paper,
    Transform from sensors to CamOmega:
    We want to solve CamOmega = rgbSens * Y ,
    where Y is coeffs for 3 lin. combs.
    MPP = inv(rgbSens'*rgbSens) * rgbSens'
    Y = MPP*CamOmega =
         0.24845    0.13737     0.35971
        -0.34663   -0.43708    -0.45585
        -0.22433   -0.088434   -0.033503
         0.26839    0.22522     0.055984
    Column amplitudes = vector lgth of each column =
         0.55158    0.51812     0.58433

The columns of rgbSens actually contain the 4 sensitivities, cyan, green, yellow, magenta. MPP is the Moore-Penrose Pseudoinverse. (See Wikipedia and pp. 9-10 in "notes.") Keeping in mind that the sensitivities are all >= 0, matrix Y gives some idea how much subtraction is done to produce the sensor chip’s orthonormal basis. That’s a step toward thinking about noise.

Below are the 3 orthonormal functions, and also the 3 best-fit functions made from the 4 camera sensitivities. The only source of “noise” is the errors that I introduced while converting graphs to numbers. It becomes more visible here, after subtractions. |
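The "column amplitudes" line of the program output can be checked directly from the printed matrix Y. The Python sketch below reads Y as 4 rows (sensors) by 3 columns (orthonormal functions), which is the layout that reproduces the printed amplitudes, and takes the Euclidean length of each column. It also lists which rows carry only negative coefficients; the variable names are mine, and the printed values are rounded, so agreement is to about four decimal places.

```python
import math

# Y as printed in the program output: 4 sensors (rows) x 3 functions (columns).
Y = [[ 0.24845,  0.13737,   0.35971],
     [-0.34663, -0.43708,  -0.45585],
     [-0.22433, -0.088434, -0.033503],
     [ 0.26839,  0.22522,   0.055984]]

# Vector length of each column of Y.
amplitudes = [math.sqrt(sum(row[k] ** 2 for row in Y)) for k in range(3)]

# Rows whose coefficients are all negative, i.e. sensors that are only subtracted.
subtracted_rows = [i for i, row in enumerate(Y) if all(c < 0 for c in row)]

print([round(a, 4) for a in amplitudes])
print(subtracted_rows)
```

With sensitivities that are never negative, the presence of whole rows of negative coefficients is a direct view of the subtraction the text describes.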

|

|

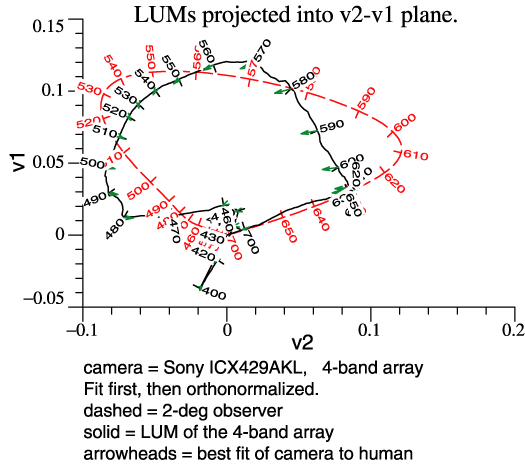

|

|

Some noise also shows up in the projections of the LUM, below. It would appear that color fidelity is not good; reds and oranges may lose some redness. |

|

|

|

| Combining LEDs to Make a White Light? |

| In

the

1970s, William A. Thornton asked an interesting

question: If you would make a white light from 3

narrow bands, how would the choice of wavelengths

affect vision of object colors under the light? His

research led to the Prime Colors, a set of

wavelengths that reveal colors well. From that

start, he invented 3-band lamps and was named

Inventor of the Year in 1979. He continued his

research and made the definition of prime colors

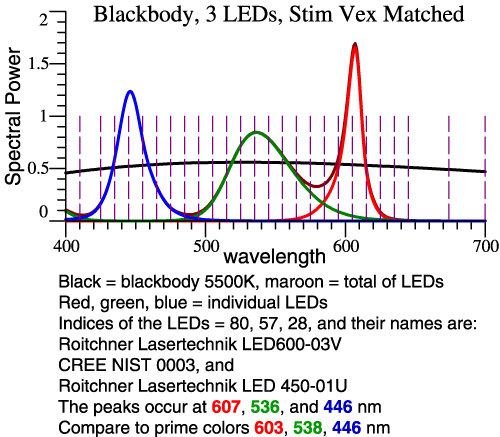

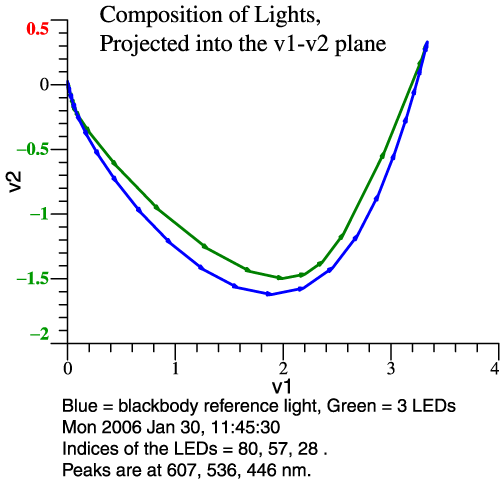

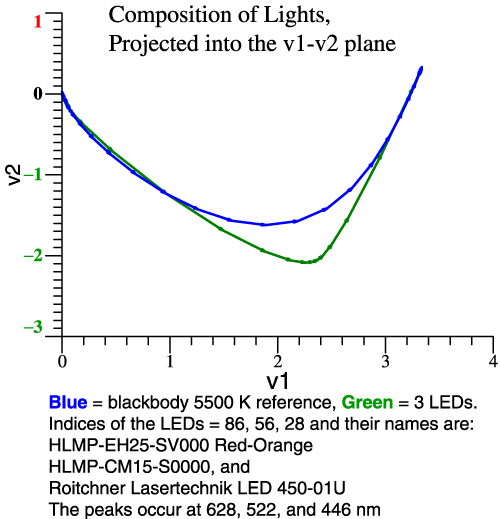

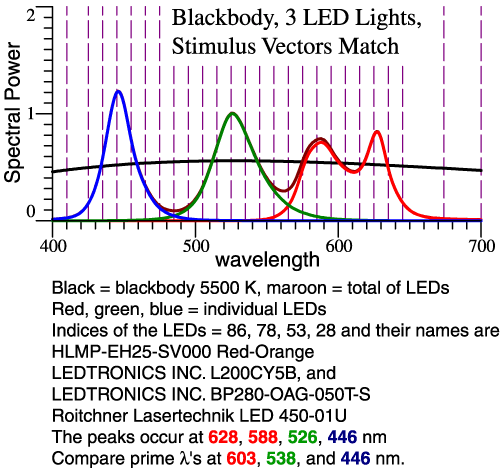

more precise. Problem Statement: For the 2° Observer, Thornton’s Prime Colors are 603, 538, 446 nm. [See CIC 6, and Michael H. Brill and James A. Worthey, "Color Matching Functions When One Primary Wavelength is Changed," Color Research and Application, 32(1):22-24 (2007). Also see Wavelengths of Strong Action, above.] If you would make a light with 3 narrow bands at those wavelengths, the light would tend to enhance red-green contrasts, making some colors more vivid, though it would do a bad job with saturated red objects. You might think then that a white light could be made from 3 LEDs whose SPDs peak at those wavelengths. This idea falls short because LEDs are not narrow-band lights. Our task then is to see what happens when real LEDs are combined, and design a good combination by speedy trial-and-error. We'll see two graphs per example: the LED spectra and their sum, and then the vectorial composition of the LED light in comparison to 5500 K blackbody. Clicking either image gives more detailed information. Example 1: Let LED Peaks ≈ Prime Colors

The reference white is 5500 Kelvin blackbody.

From 119 types measured by Irena Fryc, 3 LEDs are

chosen by their peak wavelengths, as shown at left.

Then we see at right that the blackbody (blue line)

makes a bigger swing to green and back. This LED

combo will dull most reds and greens. |

|

|

|

|

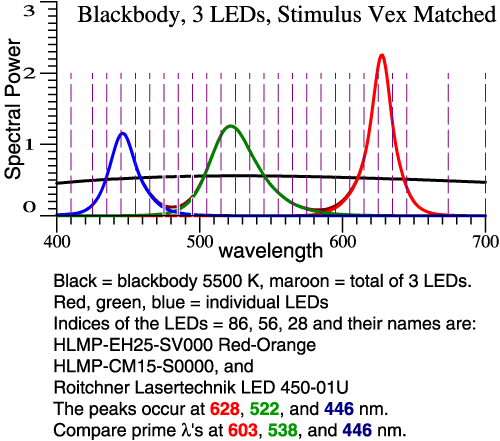

Example 2: Greener Green and Redder Red

To increase the swing towards green and back, we let the green be greener (shorter λ) and the red be redder (longer λ). In all cases, the LED amplitudes are adjusted so the total tristimulus vector matches the blackbody.
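Adjusting three LED amplitudes to hit a target tristimulus vector is a 3×3 linear problem, since the tristimulus vector of a mixture is the amplitude-weighted sum of the LEDs' vectors. The Python sketch below is one way to set it up, using Cramer's rule; the per-LED vectors and the target are made-up numbers, not measured LED or blackbody data, and `solve3` is a hypothetical helper.

```python
def det3(m):
    """Determinant of a 3 x 3 matrix stored as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(M, b):
    """Solve the 3 x 3 system M a = b by Cramer's rule."""
    d = det3(M)
    result = []
    for k in range(3):
        # Replace column k of M by the right-hand side b.
        Mk = [row[:k] + [b[i]] + row[k + 1:] for i, row in enumerate(M)]
        result.append(det3(Mk) / d)
    return result

# Made-up tristimulus vectors (v1, v2, v3) of each LED at unit amplitude.
leds = {"red":   [1.0,  1.0, -0.5],
        "green": [2.0, -1.0, -0.5],
        "blue":  [0.5,  0.0,  1.0]}
target = [8.0, 0.0, 2.0]       # made-up vector of the reference white

names = ["red", "green", "blue"]
# Column k of M is the vector of LED k, so that M a = target.
M = [[leds[n][i] for n in names] for i in range(3)]
amps = solve3(M, target)

# Rebuild the mixture to confirm that it lands on the target.
mix = [sum(a * leds[n][i] for a, n in zip(amps, names)) for i in range(3)]
print(dict(zip(names, amps)), mix)
```

A physically usable answer needs all three amplitudes to be positive. With 4 LEDs and a fixed ratio between two of them, as in Example 3, the two reds can be summed into one column first and the same 3×3 solve applies.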

|

|

|

|

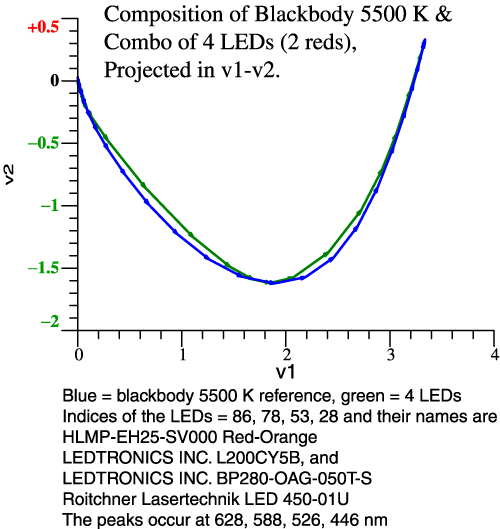

| Still on example 2, we can see from the vector composition (right-hand graph) that red-green contrast will be good, but some colors may be particularly distorted. Clicking the left-hand graph confirms that idea in the color shifts of the 64 Munsell papers.

Example 3: Broaden the Red Peak

The problem in example 2 is known to some lighting experts. The light needs to have a broader range of reds. The remedy is to use 2 red LEDs. For simplicity, the proportion of the 2 reds is fixed, not adjustable. |

|

|

|

| Think of those green and red limit colors. Some of those colors will still be dulled, but we are tracking the blackbody pretty closely. Further tweaking is possible. |

|

| Vectorial Color |

| Invariants |

| Food for Thought ... |

| The End |

|

Please feel free to contact me at any time. I am always eager to discuss lighting, color, cameras etc.

Jim Worthey

|

| Special Credit |

| Stop |

| Scroll No Farther |

|

Material Below Addresses Obscure Questions |

|

|

|

|